SEO is commonly divided into three types: on-page SEO, off-page SEO, and technical SEO. On-page SEO deals with optimizing content to make it more relevant for users, whereas off-page SEO deals with promoting the website through link building. Technical SEO is the third pillar, aimed at better crawling and indexing by search engines. It is concerned with helping search engine crawlers access your website easily, crawl it faster, interpret it correctly, and index it properly. The word "technical" signifies that the effort goes into optimizing the infrastructure of the website. The following is a list of best practices for technical SEO that can boost your website’s ranking by allowing proper crawling and indexing.

Select A Fixed Domain –

You might have observed that a website is accessible with or without typing www in front of its domain name. While this is a convenience for internet users, search engine crawlers can treat your domain with www and without www as two different websites. This leads to duplicate content issues, which in turn can cause indexing problems and a loss of page rank.

Therefore, you have to tell the search engine crawlers which domain name you prefer, so that they ignore the other one and no duplicate content or indexing issue arises. To set it, sign up for Google Webmaster Tools (now called Google Search Console), where the option has been offered under Site Settings. If you are using a CMS like WordPress, you can set it directly from General Settings.
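To make the idea of a single preferred domain concrete, here is a minimal Python sketch that rewrites a URL to the preferred host when it points at the www or non-www twin of the same site. The host `www.example.com` and the function names are placeholders invented for illustration, not part of any tool mentioned above. The result could be used, for instance, as a redirect target or a canonical link.

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical preferred host; replace it with your own domain.
PREFERRED_HOST = "www.example.com"


def strip_www(host: str) -> str:
    """Drop a leading 'www.' so both variants of a host compare equal."""
    return host[4:] if host.startswith("www.") else host


def canonical_url(url: str, preferred_host: str = PREFERRED_HOST) -> str:
    """Rewrite url to the preferred host if it is the www/non-www twin."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    if strip_www(host) != strip_www(preferred_host.lower()):
        return url  # a different site: leave it untouched
    netloc = preferred_host
    if parts.port:
        netloc = f"{preferred_host}:{parts.port}"  # keep an explicit port
    return urlunsplit(
        (parts.scheme, netloc, parts.path, parts.query, parts.fragment)
    )


print(canonical_url("http://example.com/blog?x=1"))
# http://www.example.com/blog?x=1
```

The sketch only computes the preferred address. How the redirect or setting is actually applied depends on your server or CMS.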

Optimizing URL Structure –

Confusing URL structures that are not SEO-friendly can be the reason your content never gets indexed. The best practices are to use lowercase throughout the URL of every webpage, use hyphens to separate words (especially keywords), keep URLs short but understandable for crawlers, avoid unnecessary characters and words such as articles, and include the main keywords on which the article is based. However, you should not stuff the URL with keywords. It should contain a few keywords, yet users should still be able to understand the subject of the content by reading the URL. Besides, the format of the URL should be your domain name followed by the page's own address, without the date or time that some sites insert in between.
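The URL rules above can be sketched as a small Python helper that turns a post title into a lowercase, hyphen-separated slug. The stop-word list, the `max_words` limit, and the `example.com` domain are assumptions made for illustration; the right list and length depend on your own content.

```python
import re
import unicodedata

# Assumed list of words to drop; the text only says to avoid articles.
ARTICLES = {"a", "an", "the"}


def slugify(title: str, max_words: int = 6) -> str:
    """Build a short, lowercase, hyphen-separated slug from a title."""
    # Fold accented letters to plain ASCII where possible.
    text = (
        unicodedata.normalize("NFKD", title)
        .encode("ascii", "ignore")
        .decode("ascii")
    )
    # Keep only runs of letters and digits, which drops punctuation.
    words = re.findall(r"[a-z0-9]+", text.lower())
    kept = [word for word in words if word not in ARTICLES]
    return "-".join(kept[:max_words])


def page_url(domain: str, title: str) -> str:
    """Domain followed directly by the slug, with no date in between."""
    return f"https://{domain}/{slugify(title)}"


print(page_url("example.com", "The Best Practices for Technical SEO!"))
# https://example.com/best-practices-for-technical-seo
```

Capping the number of words keeps the URL short, but it can cut off a keyword, so the limit is worth checking against real titles.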

Work On Navigation –

Technical SEO is largely concerned with the structure of your website, and navigation is the most important part of that structure. Users prefer to visit a website whose overall structure is minimalist and where they can find what they are looking for intuitively and effortlessly. A clean structure also helps crawlers understand the content. But many website owners put so much effort into increasing the conversion rate that they forget about the site structure.

This is how they hamper the SEO of the site and come to regret it in the long run. Instead of having a single category page, you should have several, so that it is clear what each category stands for, and associate them with the archive pages. Remove unnecessary elements that users will not be interested in, and do not overload pages with components such as ads and pop-ups that irritate visitors. Keep them to a minimum and make sure that they work as you expect. You can experiment to find the right spot to place ads instead of having them everywhere. Showing related posts will increase engagement. Apart from that, instead of showing all posts on one page, you can add breadcrumbs.
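As a rough illustration of a breadcrumb trail, the following Python sketch derives one from the path of a page URL. It assumes that each path segment corresponds to a category or page and that labels can be guessed from the slug. Both are simplifications; a real site would normally take labels from its CMS.

```python
from urllib.parse import urlsplit


def breadcrumbs(url: str, home_label: str = "Home") -> list[tuple[str, str]]:
    """Return (label, link) pairs from the site root down to the page."""
    parts = urlsplit(url)
    base = f"{parts.scheme}://{parts.netloc}"
    trail = [(home_label, base + "/")]
    path = ""
    for segment in parts.path.split("/"):
        if not segment:
            continue  # skip empty pieces from leading or trailing slashes
        path += "/" + segment
        # Assumed labelling rule: hyphens become spaces, words capitalised.
        label = segment.replace("-", " ").title()
        trail.append((label, base + path))
    return trail


for label, link in breadcrumbs("https://example.com/seo/technical-seo"):
    print(label, "->", link)
```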

Meta Description and Tags –

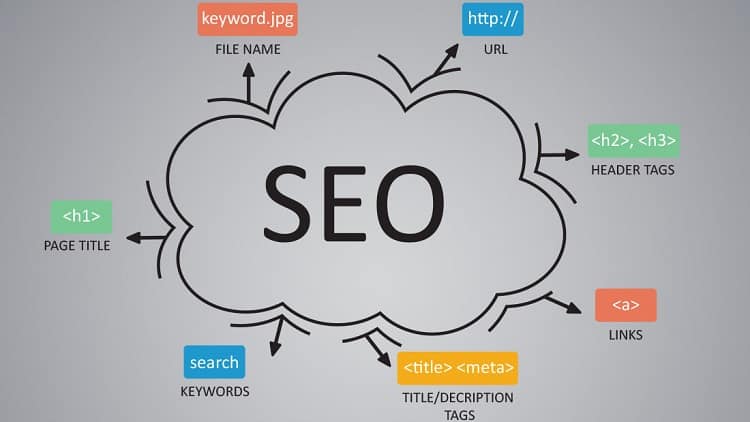

To help search engine crawlers understand your posts and web pages better, you should use tags and write meta descriptions. These are two ingredients that many website owners overlook even though they are important. The tags should be your keywords as well as the words or phrases that users are likely to type into search engines. Similarly, the meta description should contain keywords, and it must be simple enough for both users and crawlers to understand what your webpage is about.
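One way to check this on an existing page is to read its meta tags and see which target keywords the description leaves out. The Python sketch below uses only the standard library; the class name, the function name, and the sample HTML are made up for illustration.

```python
from html.parser import HTMLParser


class MetaTagReader(HTMLParser):
    """Collect the content of the description and keywords meta tags."""

    def __init__(self):
        super().__init__()
        self.meta = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if name in ("description", "keywords"):
            self.meta[name] = attributes.get("content") or ""


def missing_keywords(html: str, keywords: list[str]) -> list[str]:
    """Return the keywords that do not appear in the meta description."""
    reader = MetaTagReader()
    reader.feed(html)
    description = reader.meta.get("description", "").lower()
    return [word for word in keywords if word.lower() not in description]


page = (
    '<html><head><meta name="description" '
    'content="A short guide to technical SEO and crawling."></head></html>'
)
print(missing_keywords(page, ["technical SEO", "indexing"]))
# ['indexing']
```

A plain substring match like this is deliberately crude. It flags gaps to review rather than deciding what the description should say.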

Apart from these, you need to optimize the XML sitemap. It lists all the pages and posts on your website. Search engine crawlers use it to find the latest posts and crawl accordingly. Make sure that the sitemap is updated as soon as you publish a new post. You can also exclude pages that have nothing relevant for users. Submit the sitemap to the different search engines for quick indexing. Along with that, check robots.txt and make sure that it does not block pages you want crawled. Besides, add an SSL certificate so that your website is served over HTTPS and is trusted by visitors. If needed, you can hire a technical SEO expert to review your website and recommend improvements.
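The sitemap and robots.txt checks can be sketched with Python's standard library. The first function builds a minimal sitemap from a list of pages, and the second reports which URLs a given robots.txt would block. The function names and the sample URLs and dates are placeholders; a CMS plugin would typically generate the sitemap for you.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages) -> str:
    """pages: iterable of (url, last_modified_date) string pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = loc
        ET.SubElement(entry, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


def blocked_pages(robots_txt: str, urls, user_agent: str = "*") -> list[str]:
    """Return the URLs that robots_txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Sample data for illustration only.
pages = [("https://example.com/", "2024-01-01")]
print(build_sitemap(pages))

robots = "User-agent: *\nDisallow: /private/\n"
print(blocked_pages(robots, ["https://example.com/",
                             "https://example.com/private/page"]))
# ['https://example.com/private/page']
```

Running `blocked_pages` against the URLs listed in your sitemap is a quick way to catch pages that you ask crawlers to index while robots.txt tells them to stay away.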